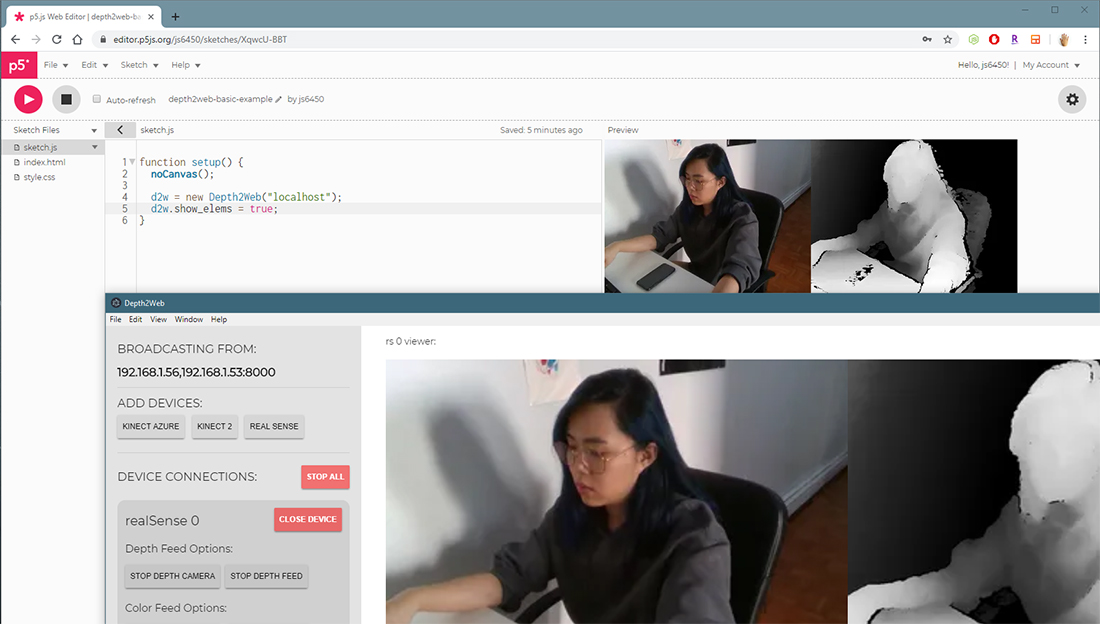

Depth2Web is a device-agnostic desktop application that sends depth feeds to the web. It can stream either the raw depth feed or data analyzed from the feed, such as depth constrained by a threshold or detected joints. The platform currently supports several versions of Intel RealSense and Microsoft Kinect sensors and standardizes the data coming from these different depth cameras. All standardized feeds are sent over a peer network as stream objects. The application aims to encourage more people to build web applications that let users interact beyond the mouse and keyboard. By making depth cameras more approachable and by supporting a wide range of devices that can be used interchangeably, Depth2Web gives artists, creators and programmers an opportunity to use depth and body motion as modes of web interaction.

PROJECT TYPE: solo project, open-source

SHOWCASED AT: ITP Thesis 2020

CODE: GitHub

TECHNICAL DETAILS: Depth2Web is a Chromium-based desktop application built with Electron. The backend server is built with Node.js, and each supported device (D4XX versions of Intel RealSense, and Microsoft Kinect 1, 2 and Azure) is implemented as a child class of an overarching Device class. Communication between the devices and the renderers goes through Electron's IPC module, and the feed is streamed as stream objects over a peer network using WebRTC.

Existing depth feed streaming tools, such as Kinectron by Lisa Jamhoury and DepthKit, focus on delivering data from specific devices. Kinectron streams color feed, depth feed and joint data from Microsoft Kinect 2 and Kinect Azure (Windows only). DepthKit supports versions of Intel RealSense as well as Microsoft Kinect 2 and Kinect Azure, but it requires users to specify which device they are using, and the devices cannot be used interchangeably because the data from RealSense and Kinect devices differ. In addition, although DepthKit can stream feeds from both Intel RealSense and Microsoft Kinect devices, it is commercial software.

Using depth cameras on the web comes with many constraints. A depth camera is not built into a typical laptop, and each device requires its own SDK (Software Development Kit) to be installed. It is also difficult to find free and open-source software that streams the feed from these devices to the web. Depth2Web aims to solve some of these problems and lower the barrier to entry for using depth cameras on the web.

There are three main points that differentiate Depth2Web from other depth feed streaming software:

Device Agnostic Software: In Depth2Web, every supported depth camera is created as an instance of a class that extends the parent Device class. The Device class holds the functions shared by all devices, such as those that return the color feed, the depth feed, and data analyzed from the feeds, such as thresholded depth and joint data. Functions specific to a supported device live in a class named after that device (Kinect2, RealSense, KinectAzure). Because the devices are modularized, more devices can be integrated into the software conveniently.
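The parent/child structure described above could look roughly like the sketch below. It is only an illustration under stated assumptions: the method names (`readRawDepth`, `getDepthFrame`, `getThresholdedFrame`), the frame shape, and the constant placeholder depth values are hypothetical and are not taken from the Depth2Web codebase. A real child class would read frames from its device's SDK.

```javascript
// Hypothetical sketch of a shared Device parent class.
// Names and frame shape are illustrative, not Depth2Web's actual API.
class Device {
  constructor(name, width, height) {
    this.name = name;
    this.width = width;
    this.height = height;
  }

  // Device-specific: each child class overrides this to return raw depth
  // values (assumed here to be millimetres in a row-major Uint16Array).
  readRawDepth() {
    throw new Error(`${this.name} must implement readRawDepth()`);
  }

  // Shared: wrap raw depth data in one common frame shape.
  getDepthFrame() {
    return {
      device: this.name,
      width: this.width,
      height: this.height,
      data: this.readRawDepth(),
    };
  }

  // Shared: keep only depths inside [near, far] and zero out the rest.
  getThresholdedFrame(near, far) {
    const frame = this.getDepthFrame();
    frame.data = frame.data.map((d) => (d >= near && d <= far ? d : 0));
    return frame;
  }
}

// Child classes hold only what differs per device. The constant fill values
// stand in for real SDK reads so the sketch runs without hardware.
class Kinect2 extends Device {
  constructor() {
    super("Kinect2", 512, 424);
  }
  readRawDepth() {
    return new Uint16Array(this.width * this.height).fill(1500);
  }
}

class RealSense extends Device {
  constructor() {
    super("RealSense", 640, 480);
  }
  readRawDepth() {
    return new Uint16Array(this.width * this.height).fill(800);
  }
}

// Calling code treats every device the same way.
const thresholded = [new Kinect2(), new RealSense()].map((device) =>
  device.getThresholdedFrame(1000, 2000)
);
```

With this layout, supporting another camera means adding one child class that overrides the device-specific read, while the thresholding and frame packaging stay in the parent.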

Open-Source Software: Because of the device-agnostic structure of the software, it is relatively easy to create a module that supports another depth device. With detailed documentation of the platform and contribution guidelines, the hope is that the community will extend the software to support more depth devices.

Multi-camera Support: Depth2Web supports streaming from multiple devices connected to a single local computer. Even when different models of devices are used, their feeds can be made uniform in size and resolution before being streamed to the web. The devices can also send different kinds of feeds.
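One way to make feeds from different cameras uniform in size is to resample every depth frame to a shared target resolution before streaming. The sketch below uses nearest-neighbour sampling, which is an assumption chosen for simplicity and not necessarily how Depth2Web resizes frames. The function names, the frame shape, and the 320x240 target are likewise hypothetical.

```javascript
// Hypothetical sketch: resample a row-major depth frame to a target size
// using nearest-neighbour sampling (an assumed, not confirmed, technique).
function resizeDepthFrame(frame, targetWidth, targetHeight) {
  const out = new Uint16Array(targetWidth * targetHeight);
  for (let y = 0; y < targetHeight; y++) {
    // Map the output row back to the closest source row.
    const srcY = Math.floor((y * frame.height) / targetHeight);
    for (let x = 0; x < targetWidth; x++) {
      // Map the output column back to the closest source column.
      const srcX = Math.floor((x * frame.width) / targetWidth);
      out[y * targetWidth + x] = frame.data[srcY * frame.width + srcX];
    }
  }
  return { ...frame, width: targetWidth, height: targetHeight, data: out };
}

// Bring frames from several devices to one shared resolution.
function standardizeFrames(frames, targetWidth, targetHeight) {
  return frames.map((f) => resizeDepthFrame(f, targetWidth, targetHeight));
}

// Placeholder frames standing in for two different cameras.
const kinectFrame = {
  device: "Kinect2",
  width: 512,
  height: 424,
  data: new Uint16Array(512 * 424).fill(1500),
};
const realSenseFrame = {
  device: "RealSense",
  width: 640,
  height: 480,
  data: new Uint16Array(640 * 480).fill(800),
};

const uniformFrames = standardizeFrames([kinectFrame, realSenseFrame], 320, 240);
```

Nearest-neighbour sampling avoids blending depth values across object edges, which interpolating filters would do. The trade-off is a blockier result when frames are scaled up.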